Multiplex image alignment

Register images acquired across multiplexed imaging rounds.
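In the file listing, every `Layer<n>` set includes a Hu image alongside its markers. This suggests Hu can serve as the common channel for alignment if each layer corresponds to one imaging round. The sketch below relies on that assumption and on a second one: rounds differ only by a rigid, integer-pixel translation. It estimates that shift with FFT-based phase correlation in NumPy and then applies the same shift to the round's marker images. All function names are hypothetical, and this is not necessarily the method this project uses. Real tissue often needs subpixel or non-rigid registration, for example scikit-image's `phase_cross_correlation` or a deformable method.

```python
import numpy as np


def phase_correlation_shift(reference, moving):
    """Estimate the integer (row, col) shift that aligns `moving` onto `reference`.

    Assumes the two images differ only by a (circular) translation.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moving)
    cross_power = f_ref * np.conj(f_mov)
    # Keep only the phase; the small epsilon avoids division by zero.
    cross_power /= np.abs(cross_power) + 1e-12
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the midpoint wrap around and correspond to negative shifts.
    shift = [p if p <= s // 2 else p - s for p, s in zip(peak, correlation.shape)]
    return tuple(int(v) for v in shift)


def apply_shift(image, shift):
    """Apply an integer shift with np.roll (pixels wrap at the borders)."""
    return np.roll(image, shift, axis=(0, 1))


def register_round(ref_hu, round_hu, round_markers):
    """Align one round to the reference round using its Hu channel.

    The shift is estimated on Hu only and then applied unchanged
    to every marker image from the same round.
    """
    shift = phase_correlation_shift(ref_hu, round_hu)
    aligned = {name: apply_shift(img, shift) for name, img in round_markers.items()}
    return shift, aligned
```

Estimating the shift on Hu alone means marker channels with sparse or round-specific signal never drive the alignment. The wrap-around from `np.roll` is a simplification. A production version would pad or crop the borders instead.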

Data Organization

├── E:/Multiplex

│ ├── H2202Desc_Layer 1_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer1_Ganglia1_5HT.tif

│ ├── H2202Desc_Layer1_Ganglia1_ChAT.tif

│ ├── H2202Desc_Layer1_Ganglia1_NOS.tif

│ ├── H2202Desc_Layer2_Ganglia1_CGRP.tif

│ ├── H2202Desc_Layer2_Ganglia1_Enk.tif

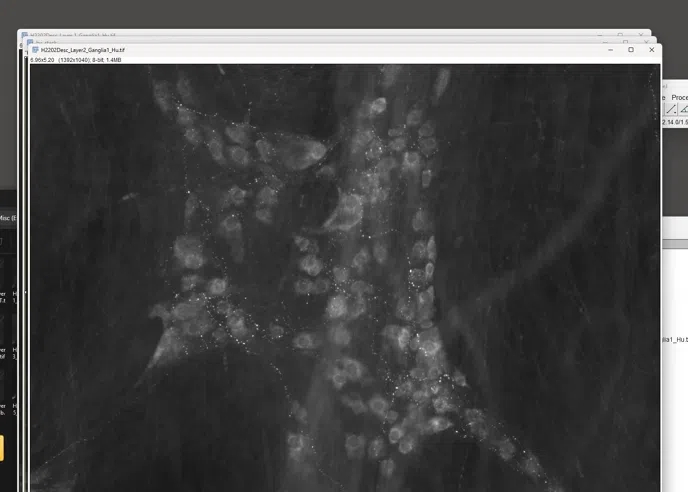

│ ├── H2202Desc_Layer2_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer2_Ganglia1_SP.tif

│ ├── H2202Desc_Layer3_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer3_Ganglia1_Somat.tif

│ ├── H2202Desc_Layer3_Ganglia1_VAChT.tif

│ ├── H2202Desc_Layer4_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer4_Ganglia1_NPY.tif

│ ├── H2202Desc_Layer5_Ganglia1_Calb.tif

│ ├── H2202Desc_Layer5_Ganglia1_Calret.tif

│ ├── H2202Desc_Layer5_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer5_Ganglia1_NF.tif

│ ├── H2202Desc_Layer6_Ganglia1_Hu.tif

│ ├── H2202Desc_Layer6_Ganglia1_VIP.tifAnalysis Steps
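Before registration, the files in the listing can be grouped by round. Within each round, the Hu image can be separated from the marker images. The sketch below assumes the naming scheme `<sample>_Layer<n>_Ganglia<m>_<marker>.tif`, which is inferred from the listing rather than documented. The pattern tolerates the space in `Layer 1` so that file still groups with the rest of layer 1. `group_by_round` and `NAME_RE` are hypothetical names.

```python
import re
from collections import defaultdict
from pathlib import Path

# Hypothetical pattern inferred from names like "H2202Desc_Layer2_Ganglia1_Hu.tif".
# "\s*" also accepts "Layer 1" (with a space), as seen in the listing.
NAME_RE = re.compile(
    r"^(?P<sample>[^_]+)_Layer\s*(?P<layer>\d+)"
    r"_Ganglia(?P<ganglion>\d+)_(?P<marker>[^.]+)\.tif$"
)


def group_by_round(filenames, reference_marker="Hu"):
    """Group TIFF names by (sample, ganglion, layer).

    Returns {(sample, ganglion, layer): {"reference": name or None,
                                         "markers": {marker: name}}}.
    """
    rounds = defaultdict(lambda: {"reference": None, "markers": {}})
    for name in filenames:
        m = NAME_RE.match(Path(name).name)
        if m is None:
            continue  # skip files that do not follow the naming scheme
        key = (m["sample"], int(m["ganglion"]), int(m["layer"]))
        if m["marker"] == reference_marker:
            rounds[key]["reference"] = name
        else:
            rounds[key]["markers"][m["marker"]] = name
    return dict(rounds)
```

Keying on `(sample, ganglion, layer)` keeps rounds from different ganglia apart. With that grouping, the Hu image of one chosen layer can act as the fixed reference for the other layers.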
